Inside the DNN "black box": recognizing an object, towards a "white box" approach, part 1

Neural networks, especially deep neural networks (DNNs), can be treated, in the terminology of cybernetics, as black boxes. But not necessarily always.

Krzysztof Michalik

12/18/2024

"Man has learned to create artificial intelligence but cannot understand how it works!" said physics professor X. Really? AI is science, not Harry Potter-style magic. AI researchers and computer scientists do understand how AI works; after all, they have been successfully designing and building these systems for decades. Strangely, some theoretical physicists now tell us almost everything about AI, almost daily and especially whatever is currently fashionable, except a good explanation of nature, which is the main purpose of their profession. I hope that some education and the right tools can make it easier to understand how "magical" AI really works.

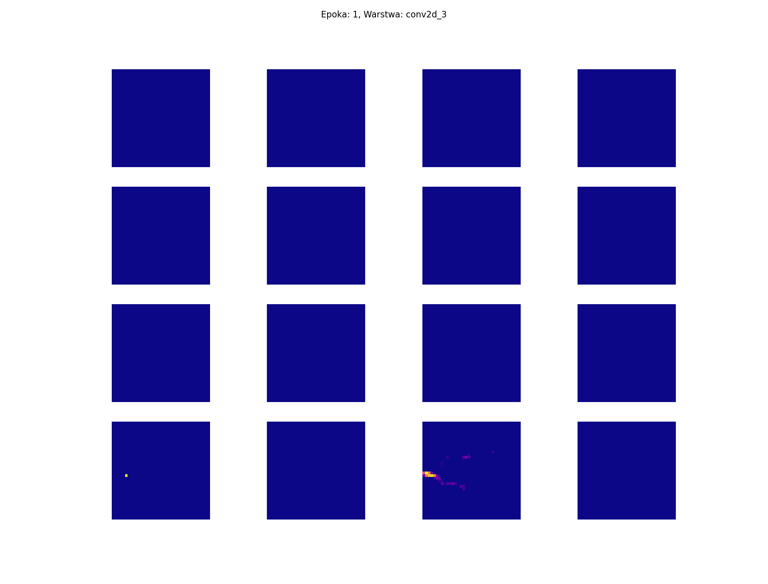

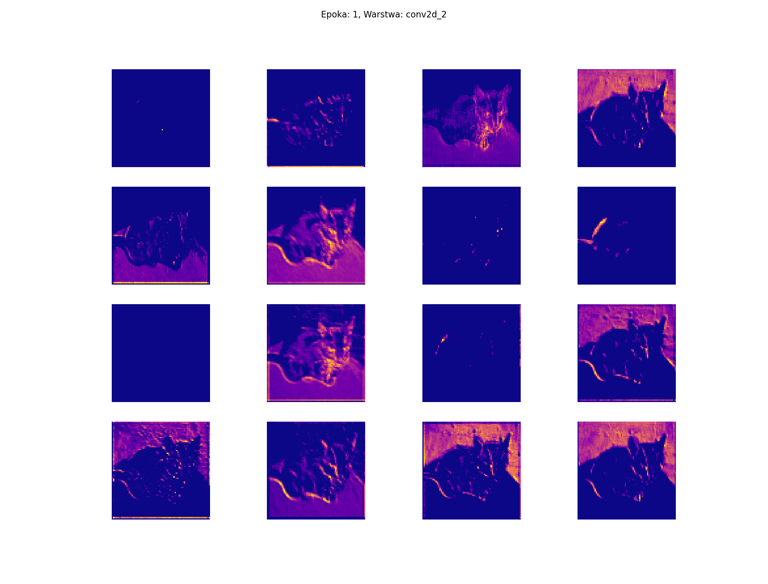

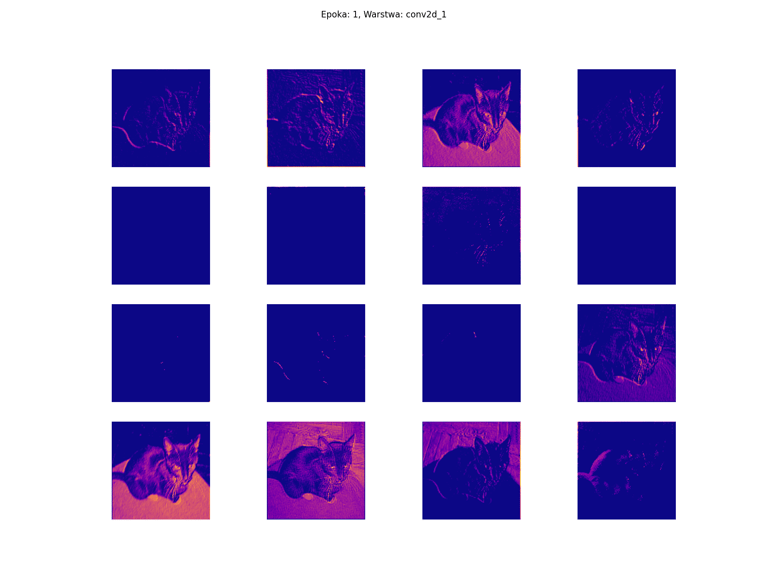

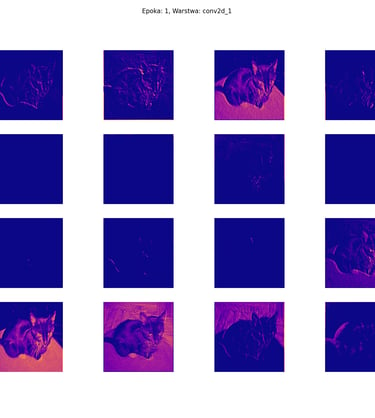

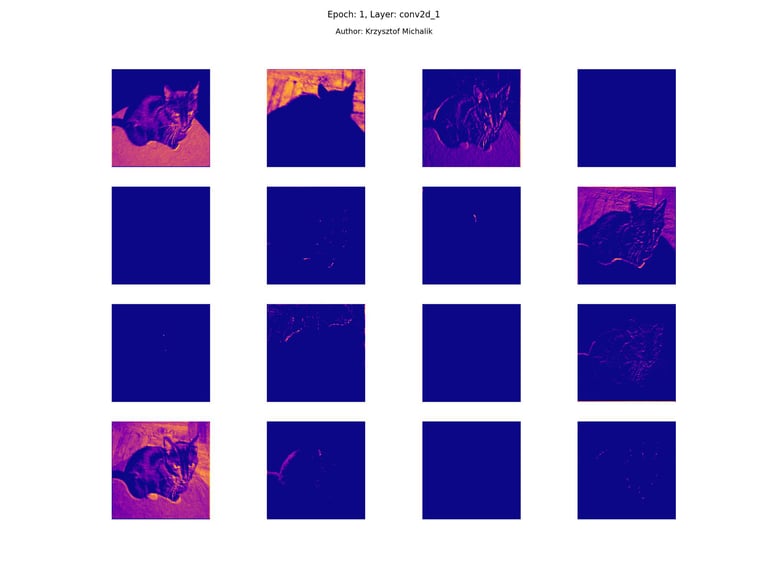

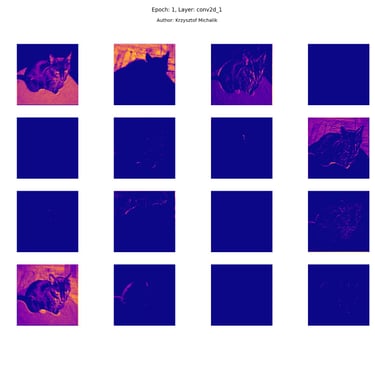

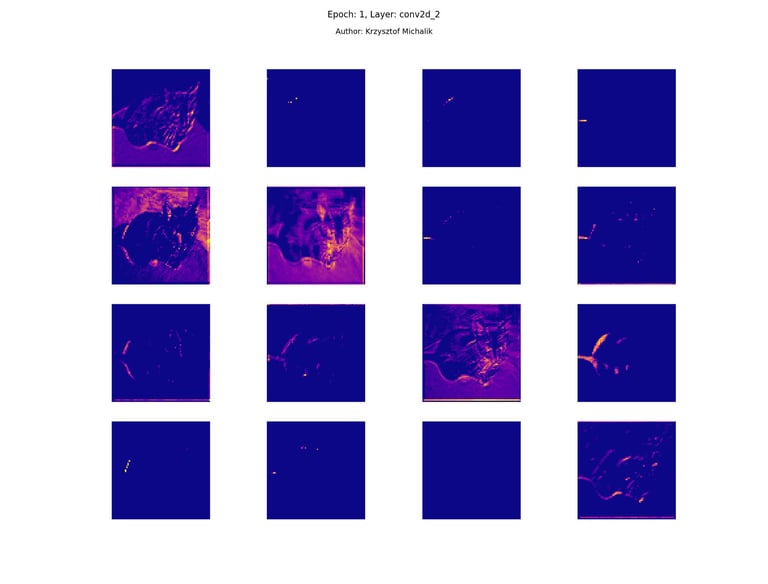

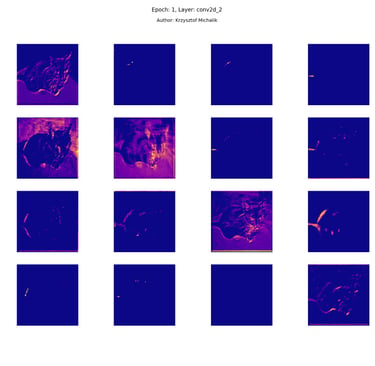

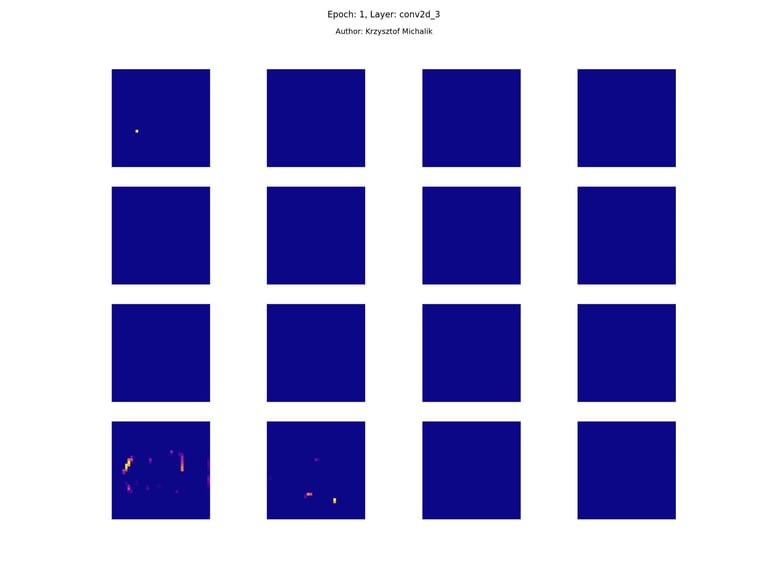

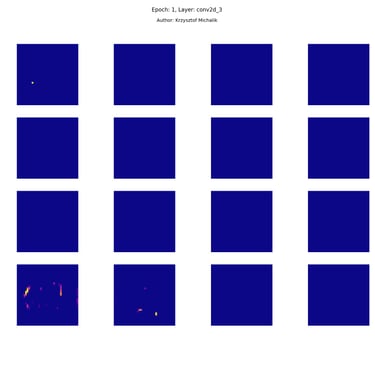

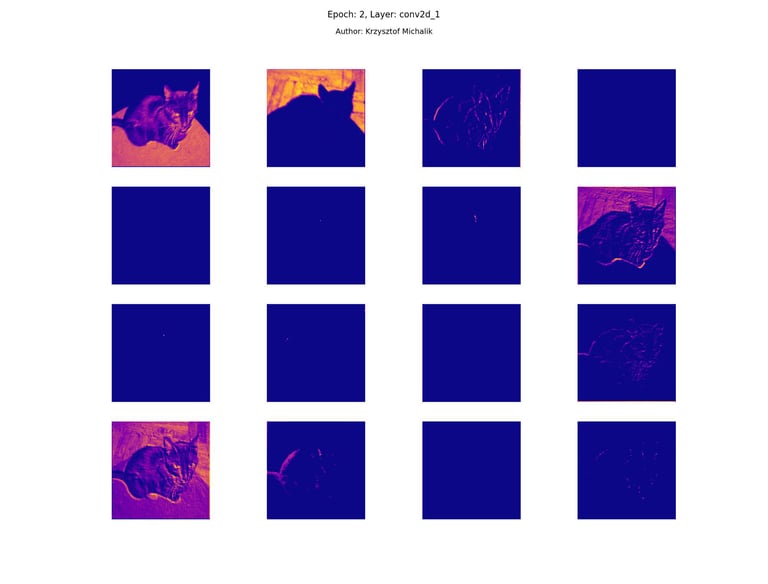

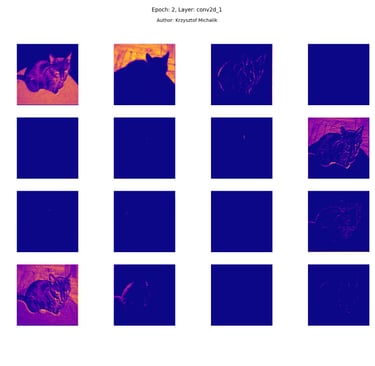

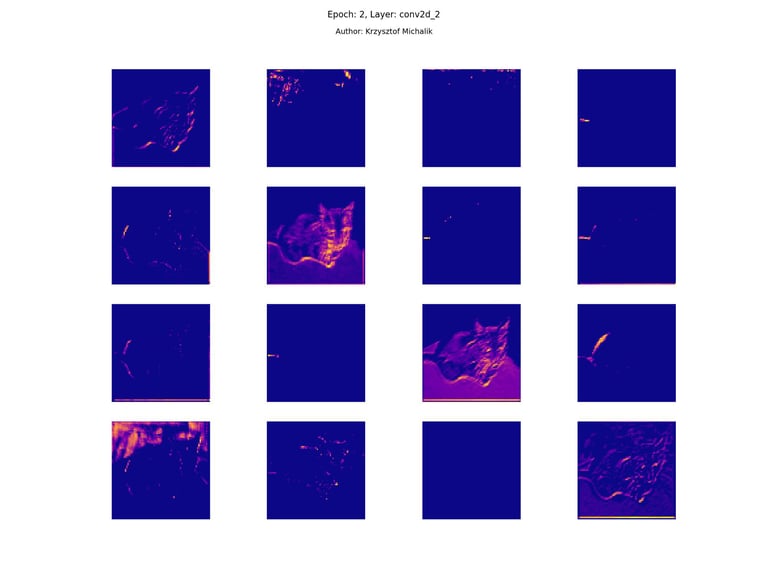

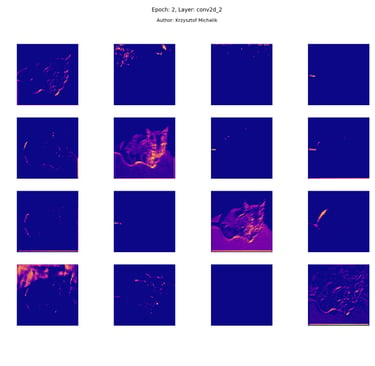

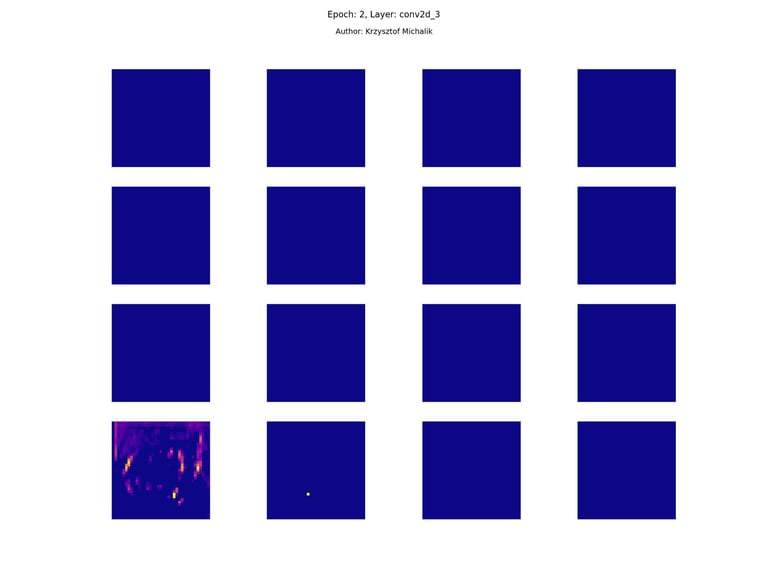

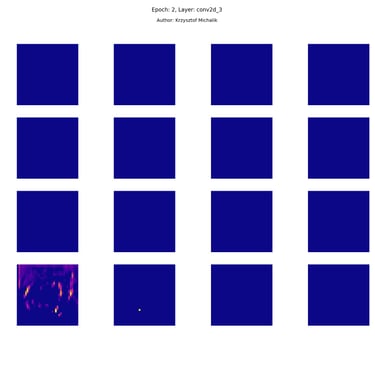

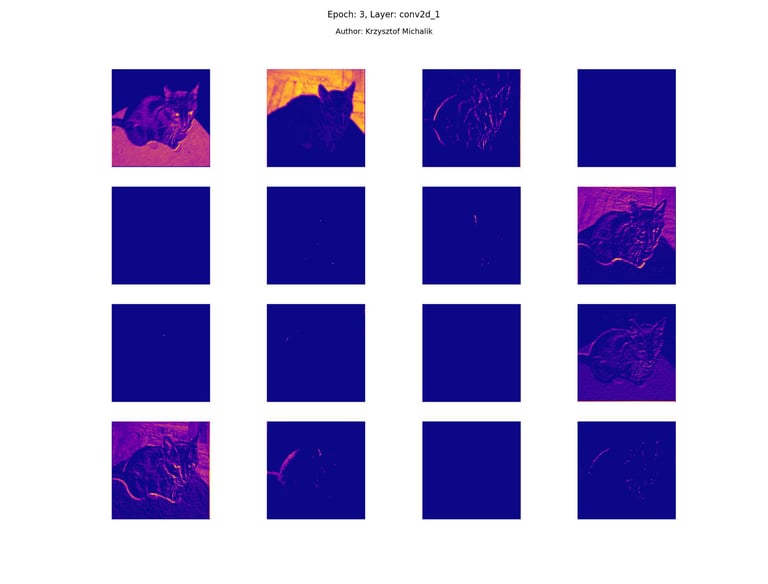

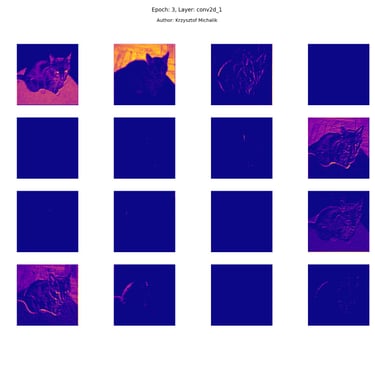

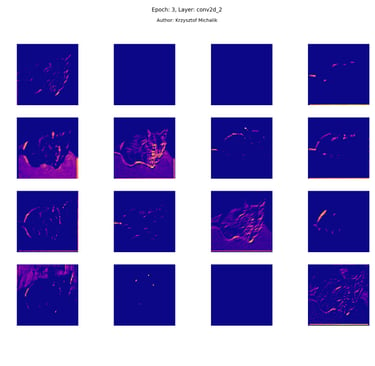

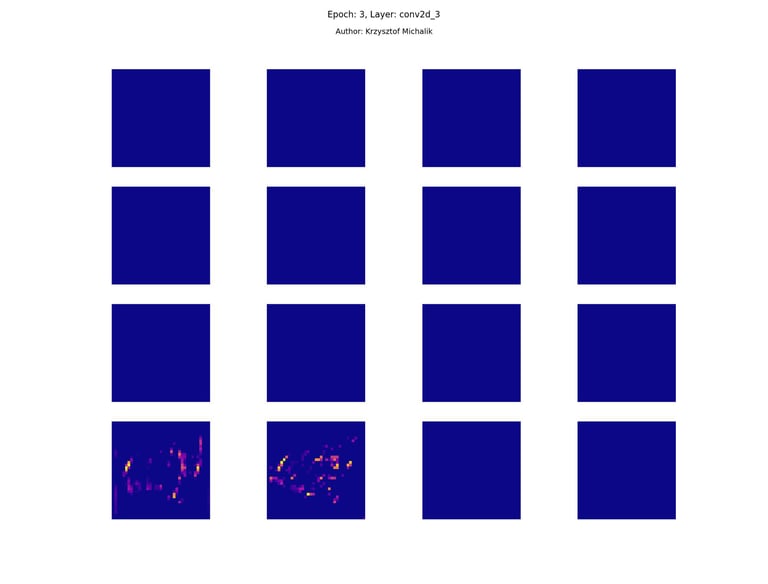

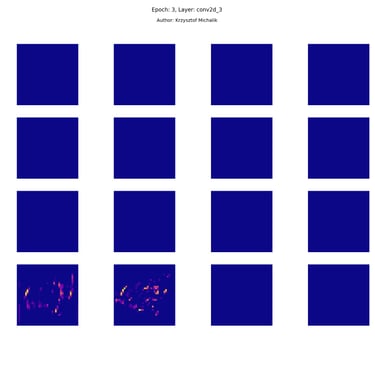

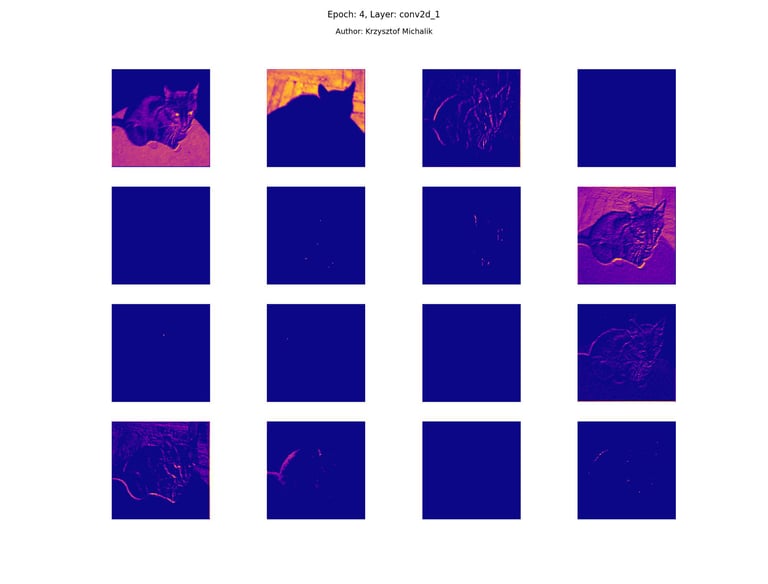

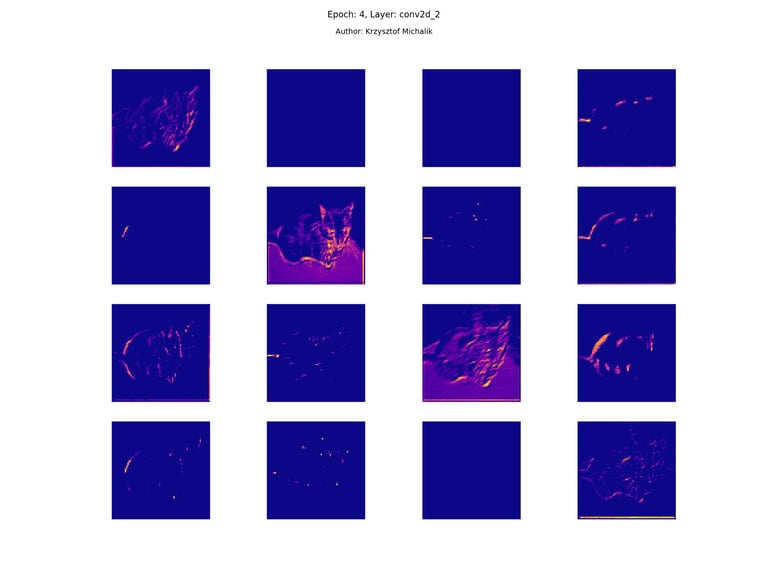

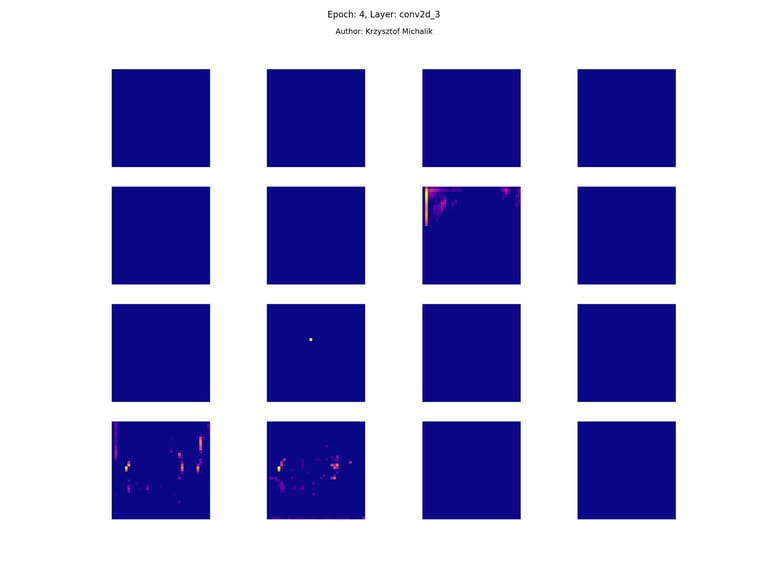

Beyond research on extracting logical and symbolic knowledge from network weights, one can also gain some insight into what happens inside the undoubtedly enormous computational process of training DNNs (deep neural networks) to recognize objects, whether for classification or, for example, object detection. The author wrote a program that can also serve didactic, educational, demonstration, and even experimental purposes: it lets one observe how the appearance of features, e.g. in feature maps, changes dynamically during network training, in response to changes in its hyperparameters, in the transition from one layer to the next, and as successive training epochs pass.
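In a convolutional layer, a feature map is the output of one filter slid over the layer's input. A minimal sketch of that computation, followed by the min-max normalization needed to display the result as an image, is shown below in plain Python. The function names, the toy image, and the hand-written vertical-edge filter (standing in for learned weights) are illustrative assumptions, not part of the author's program:

```python
from typing import List

Matrix = List[List[float]]


def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """2-D cross-correlation with 'valid' padding, as computed by typical conv layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            acc = 0.0
            # Weighted sum of the input patch under the filter.
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        out.append(row)
    return out


def relu(fmap: Matrix) -> Matrix:
    """Element-wise ReLU activation."""
    return [[max(0.0, v) for v in row] for row in fmap]


def to_grayscale(fmap: Matrix) -> List[List[int]]:
    """Min-max normalize a feature map to 0..255 so it can be rendered as an image."""
    flat = [v for row in fmap for v in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        # A constant map carries no contrast; render it as black.
        return [[0 for _ in row] for row in fmap]
    return [[round(255 * (v - lo) / (hi - lo)) for v in row] for row in fmap]


# Toy 5x5 input: a bright vertical bar on a dark background.
image = [[0, 0, 1, 0, 0] for _ in range(5)]
# Hypothetical vertical-edge filter; in a trained network these weights are learned.
kernel = [[-1, 0, 1],
          [-1, 0, 1],
          [-1, 0, 1]]

feature_map = relu(conv2d_valid(image, kernel))
print(to_grayscale(feature_map))  # → [[255, 0, 0], [255, 0, 0], [255, 0, 0]]
```

Here the filter responds only to the bar's dark-to-bright left edge. In a real framework one would instead read the activations of a trained layer (for example with a forward hook in PyTorch) and apply the same normalization before rendering them.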

The images in this article show selected examples of monitoring learning in the internal layers of DNNs, with varying color schemes and with sampling intervals ranging from every epoch to every 10 training epochs.
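Sampling snapshots at a fixed epoch interval and switching color schemes can be expressed as two small helpers. This is a hypothetical sketch: `snapshot_epochs`, `two_color_map`, and `colorize` are illustrative names, and the two-color linear gradient is an assumed, simple stand-in for whatever palettes the author's system actually uses:

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]


def snapshot_epochs(num_epochs: int, interval: int) -> List[int]:
    """1-based epochs at which feature maps are captured: every `interval` epochs."""
    if interval < 1:
        raise ValueError("interval must be >= 1")
    return list(range(interval, num_epochs + 1, interval))


def two_color_map(value: int, low: RGB, high: RGB) -> RGB:
    """Map a 0..255 gray level to RGB by linear interpolation from `low` to `high`."""
    t = value / 255
    return tuple(round(a + (b - a) * t) for a, b in zip(low, high))


def colorize(gray: List[List[int]], low: RGB, high: RGB) -> List[List[RGB]]:
    """Apply the two-color map to every pixel of a normalized feature map."""
    return [[two_color_map(v, low, high) for v in row] for row in gray]


print(snapshot_epochs(30, 1)[:3])  # → [1, 2, 3]
print(snapshot_epochs(30, 10))     # → [10, 20, 30]

# Hypothetical palette: dark blue for weak activations, yellow for strong ones.
print(colorize([[0, 255]], (0, 0, 128), (255, 255, 0)))  # → [[(0, 0, 128), (255, 255, 0)]]
```

A short interval captures every small change but produces many frames; a longer one (e.g. every 10 epochs) makes slower, larger-scale changes in the features easier to see.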

Images generated by the author's LogosXAI system (c) 2005-2006 Krzysztof Michalik

An example of learning visualized with different contrast levels, which affects how the patterns, edges, and textures of the analyzed (learned) object are perceived.
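How contrast changes what stands out can be illustrated with a simple linear contrast adjustment of a normalized (0 to 255) feature map. A minimal sketch, assuming a stretch around mid-gray 128 with clamping; `adjust_contrast` is a hypothetical helper, not the LogosXAI implementation:

```python
from typing import List


def adjust_contrast(gray: List[List[int]], factor: float) -> List[List[int]]:
    """Linear contrast change around mid-gray (128), clamped to 0..255.

    factor > 1 increases contrast; 0 < factor < 1 decreases it.
    """
    def clamp(v: float) -> int:
        return max(0, min(255, round(v)))

    return [[clamp(128 + factor * (v - 128)) for v in row] for row in gray]


# A faint edge: small differences around mid-gray.
weak_edge = [[100, 128, 156]]
print(adjust_contrast(weak_edge, 2.0))  # → [[72, 128, 184]]
print(adjust_contrast(weak_edge, 0.5))  # → [[114, 128, 142]]
```

Raising the factor pushes faint differences apart, so weak edges and textures become visible; lowering it flattens them. This is why the same feature map can look quite different at different contrast settings.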